The 1st Renmin University of China (RUC) Workshop on AI for Social Good will be held online via VooV Meeting on December 20, 2020 (Beijing time). The AI for Social Good workshop will focus on social problems for which artificial intelligence has the potential to offer meaningful solutions. The problems we chose to focus on are inspired by the United Nations Sustainable Development Goals (SDGs), a set of seventeen objectives that must be addressed in order to bring the world to a more equitable, prosperous, and sustainable path. In particular, we will focus on the following areas: public health, environmental & species sustainability, disaster risk, humanitarianism, and economic and social welfare.

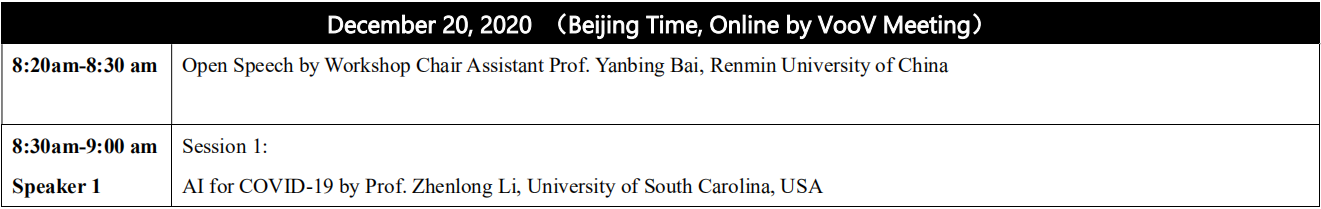

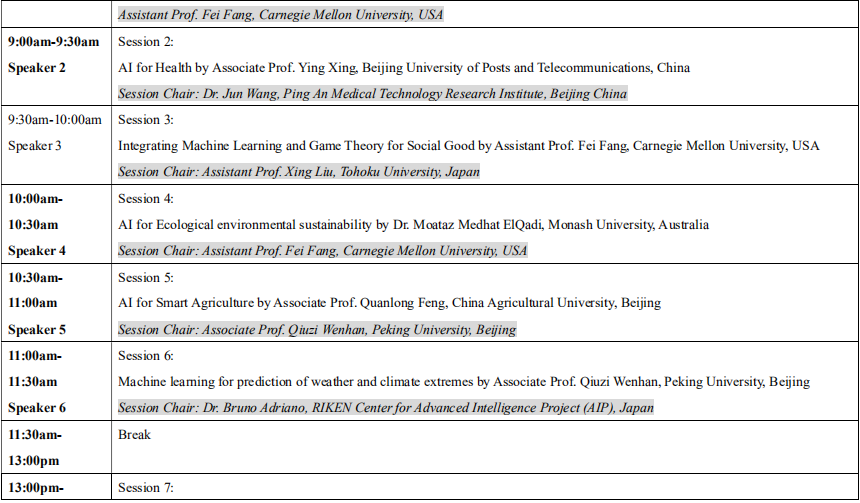

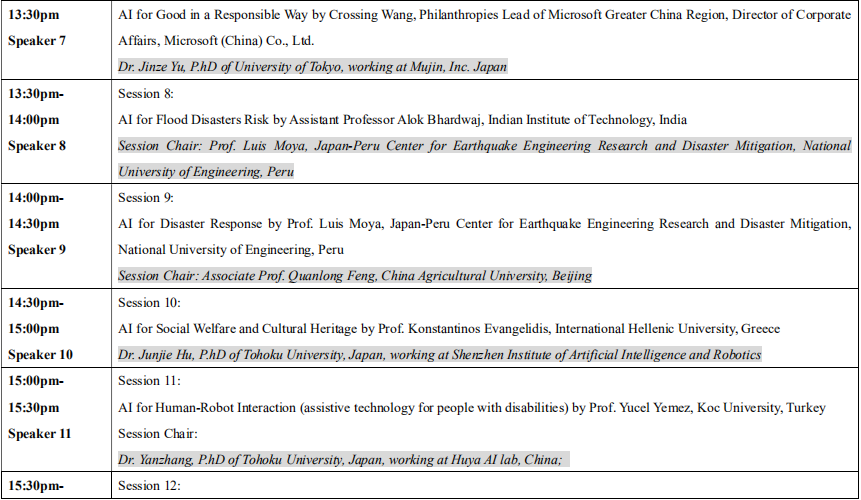

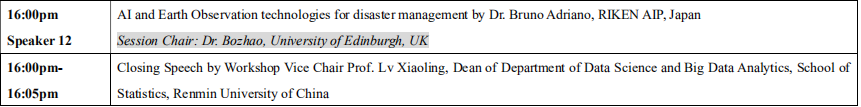

Workshop Schedule

Note: Each 30-minute slot includes a 20-25 minute talk and a 5-10 minute question-and-answer session.

Speaker 1

Title: Fighting the COVID-19 Pandemic with Big Movement Data

Abstract

Human movement is among the essential forces that drive the spatial spread of infectious diseases. Prediction and control of the spread of infectious diseases such as COVID-19 benefit greatly from our growing computing capacity to quantify fine-scale human movement. Existing methods for monitoring and modeling the spatial spread of infectious diseases rely on various data sources as proxies of human movement, such as airline travel data, mobile phone data, and dollar bill tracking. However, intrinsic limitations of these data sources prevent us from systematically monitoring and analyzing human movement at different spatial scales (from local to global). Big social media data such as geotagged tweets have been widely used in human mobility studies, yet more research is needed to validate the capabilities and limitations of using such data for studying human movement at different geographic scales in the context of global infectious disease transmission. In this presentation, I will introduce our approaches to using big social media data, coupled with other mobility data sources such as SafeGraph's foot traffic data and the Google Mobility Report, in fighting COVID-19. The approaches cover different perspectives, including extracting population flows from geotagged tweets, developing indices to quantify human mobility, understanding socioeconomic disparities in mobility patterns, and visualizing and sharing human mobility data.

Bio

Dr. Zhenlong Li is an Associate Professor in the Department of Geography at the University of South Carolina (USC), where he established and leads the Geoinformation and Big Data Research Laboratory (GIBD, http://gis.cas.sc.edu/gibd). He received a B.S. degree in Geographic Information Science from Wuhan University in 2006, and a Ph.D. (with distinction) in Earth Systems and Geoinformation Sciences from George Mason University in 2015. He was recognized as a Breakthrough Star by USC in 2020. Dr. Li’s primary research field is GIScience with a focus on geospatial big data analytics, high performance computing, spatiotemporal analysis/modelling, CyberGIS, and geospatial artificial intelligence (GeoAI) with applications to disaster management, human mobility, and public health. He has more than 80 publications including over 50 peer-reviewed journal articles. He has served as the Chair of the Association of American Geographers CyberInfrastructure Specialty Group, as Co-Chair of the Cloud Computing Group of the Federation of Earth Science Information Partners (ESIP), and on the Board of Directors of the International Association of Chinese Professionals in Geographic Information Sciences (CPGIS). Currently, he sits on the Editorial Boards of four international journals: the ISPRS International Journal of Geo-Information, Geo-spatial Information Science, PLOS ONE, and Big Earth Data. He also serves as a peer reviewer for more than 30 international journals.

Speaker 2

Title: Research on cardiac data based on deep learning

Abstract

Cardiovascular diseases are currently the leading cause of death and a common threat to all humankind. Exploring fully automatic classification and segmentation algorithms is therefore significant for the clinical prevention and diagnosis of cardiovascular diseases. Our talk will focus on the analysis of cardiac data based on deep learning, mainly covering arrhythmia classification from one-dimensional ECG signals and cardiac image segmentation.

Bio

Ying Xing received a doctoral degree in computer science and technology from Beijing University of Posts and Telecommunications (BUPT) and is now an Associate Professor in the School of Artificial Intelligence at BUPT. Research interests include software testing, computer vision, big data, and AI, with a focus on semantic segmentation and classification of medical image data.

Speaker 3

Title: Integrating Machine Learning and Game Theory for Social Good

Abstract

The rapid advances in AI have made it possible to leverage AI techniques to address some of the most challenging problems faced by society. Many of these problems involve multiple self-interested agents or parties with their own goals or preferences. While game theory is an established paradigm for reasoning about strategic interactions among multiple decision-makers, its applicability in practice is often limited by its strong assumptions and the intractability of computing equilibria in large games. On the other hand, machine learning has led to huge successes in various domains and can be leveraged to overcome the limitations of game-theoretic analysis. This talk will cover our work on integrating learning with computational game theory for addressing societal challenges such as infrastructure security, wildlife conservation, and food security.

Bio

Fei Fang is an Assistant Professor at the Institute for Software Research in the School of Computer Science at Carnegie Mellon University. Before joining CMU, she was a Postdoctoral Fellow at the Center for Research on Computation and Society (CRCS) at Harvard University. She received her Ph.D. from the Department of Computer Science at the University of Southern California in June 2016.

Her research lies in the field of artificial intelligence and multi-agent systems, focusing on integrating machine learning with game theory. Her work has been motivated by and applied to security, sustainability, and mobility domains, contributing to the theme of AI for Social Good. Her work has won the Distinguished Paper at the 27th International Joint Conference on Artificial Intelligence and the 23rd European Conference on Artificial Intelligence (IJCAI-ECAI’18), the Innovative Application Award at Innovative Applications of Artificial Intelligence (IAAI’16), and the Outstanding Paper Award in the Computational Sustainability Track at the International Joint Conferences on Artificial Intelligence (IJCAI’15). She was invited to give an IJCAI-19 Early Career Spotlight talk. Her dissertation was selected as the runner-up for the IFAAMAS-16 Victor Lesser Distinguished Dissertation Award, and as the winner of the William F. Ballhaus, Jr. Prize for Excellence in Graduate Engineering Research as well as the Best Dissertation Award in Computer Science at the University of Southern California. Her work has been deployed by the US Coast Guard for protecting the Staten Island Ferry in New York City since April 2013. Her work has also led to the deployment of PAWS (Protection Assistant for Wildlife Security) in multiple conservation areas around the world, which provides predictive and prescriptive analysis for anti-poaching efforts.

Speaker 4

Title: AI for Ecological Environmental Sustainability

Abstract

Climate change is threatening animal habitats, ranges, and lifecycles. Understanding these problems requires frequently updated data at planetary scale, but the spatial and temporal attributes of these data render them expensive and often impractical to collect.

Recent developments in electronic hardware and the accompanying advances in data storage and processing capabilities have given rise to new possibilities for planet-scale datasets. Examples range from satellite imagery and remote sensing, to data generated from sensors and cameras, to data contributed by human volunteers at large scale. These massive data sources, however, pose unconventional challenges in terms of data quality and requirements for data processing. AI-based methods are showing promise in mitigating these new challenges.

In this talk, we discuss some examples of applying AI-based methods, namely computer vision and classification models, to address emerging issues in relatively new data sources used in ecological research.

Bio

Dr. Moataz Medhat ElQadi received his PhD from Monash University, Australia. He is interested in applying AI to solve problems in the living world. In his PhD, he used geotagged photos available on social network sites to map species distributions in space and time while devising methods for overcoming data quality issues using computer vision and machine learning. His project was awarded a Microsoft AI for Earth Grant and was supported by the Potsdam Institute for Climate Impact Research (PIK) to collaborate with the International Institute for Applied Systems Analysis (IIASA) in Austria.

Moataz has since joined the Revitalising Informal Settlements and their Environments program, RISE (rise-program.org), an action-research program at the nexus of health, environment, water, and sanitation in both Indonesia and Fiji. Moataz is working on big data and machine learning challenges faced in this unique program with a focus on acoustic analysis of biotic environments.

Moataz is interested in human and computer languages alike and can be reached at https://www.linkedin.com/in/moatazmedhat or moataz.mahmoud@monash.edu

Speaker 5

Title: AI for smart agriculture

Abstract

Artificial Intelligence (AI) has been widely used in agricultural remote sensing, where it has revolutionized methods for crop classification, yield estimation, and related tasks. This talk mainly focuses on the use of deep learning models for several agricultural applications, such as detection of greenhouses and mulching films from very-high-resolution optical satellite data, crop classification using multi-temporal UAV images, and winter wheat yield estimation based on multi-source data fusion.

Bio

Quanlong Feng received a doctoral degree from the Institute of Remote Sensing and Digital Earth, Chinese Academy of Sciences (RADI, CAS), and is now an Associate Professor at China Agricultural University (CAU). Research interests include pattern recognition and image analysis, including scene understanding, semantic segmentation, and object detection from very-high-resolution remote sensing data, especially UAV imagery.

Personal webpage:

https://www.researchgate.net/profile/Quanlong_Feng

Speaker 6

Title: Machine learning for prediction of weather and climate extremes

Abstract

Big data and AI technology are reshaping our world. The combination of diverse data resources, ever-increasing computational power, and recent advances in statistical modelling and machine learning brings new opportunities to expand our knowledge of the Earth system from data. In particular, our current understanding of high-impact weather and climate extreme events, one of the major concerns of future climate change, is limited and needs to be further improved by developing and adapting tools from the fields of machine learning and artificial intelligence. This talk reviews recent progress in studies of weather and climate extremes using machine learning methods and discusses interesting challenges to explore in the future.

Bio

Dr. Qiuzi Han Wen is an Associate Research Professor at the School of Mathematical Sciences and the assistant director of the National Engineering Laboratory for Big Data Analysis and Application Technology, Peking University. Before joining PKU, she worked as a Research Scientist at Environment Canada and later founded a science and technology company, HuaNongTianshi, which has provided agriculture data and information services for over a million acres across China. She has published high-impact papers and holds several patents on big-data technology and applications. Her representative work on detection and attribution of changes in extreme temperatures was chosen as an "AGU Research Spotlight" and featured in AGU’s EOS magazine. She has also led or participated in more than 10 national and provincial key research and development projects. Her current research interests cover prediction and attribution of weather and climate extremes, emergency and disaster risk management, and data technology in agriculture. Her recent work on epidemic risk assessment has been applied in the regional prevention and control of the COVID-19 pandemic in China.

Personal webpage:

https://www.math.pku.edu.cn/jsdw/js/dsjfxyyyjzgjgcsys/99465.htm

Speaker 7

Title: Machine learning for prediction of weather and climate extremes

Abstract

The rapid development of technology brings challenges and opportunities to all sectors. It is inspiring that AI is applied not only for business purposes, but also for social good. Since establishing its China branch in the 1990s, Microsoft has consistently empowered nonprofit organizations with technology. Besides providing technical support, Microsoft practices Tech/AI for Good in a responsible way by following its six Responsible AI principles. This talk will share Microsoft China's Tech/AI for Good practices and Responsible AI principles.

Bio

Crossing Wang is Philanthropies Lead of Microsoft Greater China Region, Director of Corporate Affairs, Microsoft (China) Co., Ltd.

In this role, Crossing is responsible for Skills for Employability, AI for Good, Tech for Social Impact, Disaster Response, and Employee Engagement. She also works with think tanks and academia on social policy experiments, research, and advocacy. Crossing has a strong passion for social development, especially children and youth issues, education, rural and urban community development, and tech for social impact. She has practiced corporate social responsibility since 2000, with expertise in program design, implementation and evaluation, and grant management. Crossing also has extensive experience in stakeholder relationships across the private sector, government departments, international and grassroots nonprofit organizations, and academia.

Crossing works across teams at the company, designing philanthropic programs which are both based on the company’s strengths and aligned with business strategies. Moreover, she partners with communication teams on composing and delivering messages, internally and externally. In 2014, Crossing won a Platinum Circle of Excellence award, which is given to fewer than 0.5% of employees every year at Microsoft globally. Besides the role of Philanthropies Lead, Crossing also served as the Business Manager of the GCR Lead, Corporate, External, and Legal Affairs for more than three years.

In addition to her work at Microsoft, Crossing is active in sharing knowledge with young people and serving people in need. She lectured on Social Work Practice with Children at Beijing Normal University in the spring semesters of 2012 to 2014. In recent years, she has given speeches to high school and college students from schools such as Peking University, Tsinghua University, and universities abroad. Her volunteer experience was broadcast by CCTV 1.

Crossing rejoined Microsoft in 2011. Before that, she went to graduate school in 2008, having worked at Microsoft China and Hewlett-Packard (China)/Agilent Technologies (China) from 1999 to 2008.

Crossing earned Master of Social Work and M.S. in Nonprofit Leadership degrees from the University of Pennsylvania School of Social Policy and Practice, and graduated magna cum laude with a concentration in child welfare and international social policies. She was honored with the Dr. Estes International Award at the commencement. She also received the Dean’s Award in 2009 and 2010.

Crossing lives in Beijing with her husband and son, who enjoy her cooking very much.

Speaker 8

Title: AI for Flood Disasters

Abstract

Floods are recurring hazards in Asia that cause huge economic damage and claim thousands of lives in the region. Nine of the top fifteen countries globally most exposed to floods are located in Asia. Due to the high population density, estimating the extent of flooded regions is important for an effective disaster response that saves lives. Flood extents can be used by first responders to respond effectively to a flood disaster, and by decision makers for hazard and water management in flood-prone areas.

Satellite remote sensing offers a convenient way to extract flood extents. Many satellite sensors can now be used to map flood extents, not only for current floods but also for past events.

‘AI for Flood Disasters’ is focussed on extracting information on flood extents using AI technology. In this lecture, I will present the application of a deep learning method, a one-dimensional convolutional neural network (1D-CNN), to Synthetic Aperture Radar (SAR) data (a type of satellite remote sensing data) to map flood extents. I will also discuss some of the challenges, and their possible solutions, in the application of AI to flood disasters.

Bio

Dr. Alok Bhardwaj is an Assistant Professor in the Civil Engineering Department at the Indian Institute of Technology, Roorkee in India. Dr. Bhardwaj completed his PhD at the National University of Singapore in 2018. Prior to joining IIT Roorkee as a faculty member, Dr. Bhardwaj worked as a post-doctoral fellow at the Earth Observatory of Singapore. Dr. Bhardwaj is a National Geographic Explorer and is supported by the National Geographic Society to conduct flood research in Asia. Dr. Bhardwaj’s main research interests include the application of remote sensing and deep learning techniques to study urban floods, and understanding the link between climate teleconnections and the occurrence of extreme rainfall events and ensuing floods in Asia.

Speaker 9

Title: Fully Automatic Machine Learning Classifiers for Remote Sensing Damage Classification

Abstract

Previous applications of machine learning in remote sensing for the identification of damaged buildings in the aftermath of a large-scale disaster have been successful. However, standard methods do not consider the complexity and costs of compiling a training data set after a large-scale disaster. Here, a number of potential solutions to the scarcity of training samples are reported. The solutions are based on exploiting additional sources of information, such as in-place sensors (e.g., GNSS, ground motion records, tidal gauges), numerical simulations, and risk analysis. As one solution, it will be shown that training samples can be replaced by aggregate estimates computed from damage functions (available in advance from the field of risk analysis). In addition, when damage functions are not available, it has recently been shown that training samples can be collected automatically if the disaster intensity can be estimated.

Bio

Dr. Moya was a Visiting Student with the Building Research Institute, Tsukuba, Japan, in 2012. From 2016 to 2020, he was a Researcher with the International Research Institute of Disaster Science (IRIDeS), Tohoku University, Japan. He received the Alexander von Humboldt Fellowship in 2019. Since 2020, he has been a Research Fellow with the Japan-Peru Center for Earthquake Engineering Research and Disaster Mitigation, National University of Engineering, Peru. He is currently a Principal Investigator of a project that aims to integrate remote sensing, in-place sensors, and numerical simulations for early disaster response. His research interests include earthquake engineering, remote sensing technologies, intelligent evacuation systems, and applied machine learning for disaster mitigation.

Speaker 10

Title: AI for Social Welfare and Cultural Heritage

Abstract

Since smart devices are becoming the primary technological means for daily human activities related to user location, location-based services constitute a crucial component of the related smart applications. Meanwhile, traditional geospatial tools such as geographic information systems (GIS), in conjunction with photogrammetric techniques and 3D visualization frameworks, can achieve immersive virtual reality over custom virtual geospatial worlds. In such environments, 3D scenes with virtual beings and monuments, with the assistance of storytelling techniques, may reconstruct historical sites and “revive” historical events. Increases in Internet and wireless network speeds and in mixed reality (MR) capabilities generate great opportunities for the development of location-based smart applications with cultural heritage content. This paper presents the MR authoring tool of the “Mergin’ Mode” project, aimed at monument demonstration through the merging of the real with the virtual, assisted by geoinformatics technologies. The project does not aim at simply producing an MR solution, but more importantly, an open source platform that relies on location-based data and services, exploiting geospatial functionalities. In the long term, it aspires to contribute to the development of open cultural data repositories and the incorporation of cultural data in location-based services and smart guides, to enable the web of open cultural data, thereby adding extra value to the existing cultural-tourism ecosystem.

Bio

Dr. Konstantinos Evangelidis is Professor of Geoinformatics in the discipline of “Web-centric Information Systems and Geographic Databases”. He currently serves as Head of the Surveying and Geoinformatics Engineering Department of International Hellenic University. His research experience focuses on data standardization and interoperability, OGC standards and services, geospatial web services, and free and open source geospatial software. Over the last years he has devoted significant effort to applied research dealing with the development of 3D geospatial visualizations, virtual geospatial worlds, and their convergence with Mixed Reality. He is currently serving as scientific manager of a European Union funded project aiming to demonstrate cultural heritage resources and monuments by merging the real with the virtual in Mixed Reality, assisted by geoinformation technologies. Before joining the university in 2010, he spent over ten years as the Technical Director and a Board Member of a consulting firm, participating in more than 30 projects and studies in the private and public sectors and in European programs. He has published more than 50 papers in high-impact journals and refereed conferences.

Speaker 11

Title: AI for Human-Robot Interaction (assistive technology for people with disabilities)

Abstract

Social robots are expected to exhibit human-like behaviors and speech abilities for fluid human-robot interaction. We explore the impact of nonverbal backchannels initiated by the robot during an interaction on user engagement. We design and conduct a human-robot interaction experiment where the robot monitors user engagement in real time and adapts its nonverbal backchanneling behavior accordingly. The engagement is monitored via automatic detection of user gaze, speech, and backchannels such as laughter/smiles and head nods from audio-visual data. The robot is programmed to mirror head nods and smiles of the user, and furthermore to initiate them based on the monitored engagement level. The experiments are conducted with a back-projected robot head in a survival story-shaping game, where each decision from the user molds the story. The proposed system is compared with a baseline in which the robot does not display any nonverbal backchannels. The higher values of user engagement obtained with our system support the importance of backchanneling in human-robot interaction.

Bio

Professor Yücel Yemez is the PI of the computer vision lab at the Department of Computer Engineering, Koç University. His research is on Computer Vision, Human-Computer Interaction, and Multimedia Signal Processing, currently focused on: Deep learning for computer vision; 3D vision and graphics; Shape correspondence; Human-robot interaction; Reinforcement learning from batch interaction data; Affective Computing.

Speaker 12

Title: AI and Earth Observation technologies for disaster management

Abstract

Remote sensing, such as optical imaging and synthetic aperture radar, provides excellent means to monitor urban environments continuously. Notably, in the case of large-scale disasters, in which a response is time-critical, images from both modalities can complement each other to convey the full damage condition accurately. However, due to several factors, such as weather conditions, it is often uncertain which data will be available for disaster response. Hence, novel methodologies that can utilize all accessible sensing data are essential for disaster management. Here, we have developed a global multisensor and multitemporal dataset for building damage mapping.

Bio

Bruno Adriano received the M.Eng. degree in disaster management from the National Graduate Institute for Policy Studies and the International Institute of Seismology and Earthquake Engineering, Building Research Institute, Japan, in 2010, and the Ph.D. degree from the Department of Civil and Environmental Engineering, Graduate School of Engineering, Tohoku University, Japan, in 2016. From 2016 to 2018, he was a fellow researcher of the Japan Society for the Promotion of Science at the International Research Institute of Disaster Science, Tohoku University, Japan. Since 2018, he has been a researcher in the Geoinformatics Unit at the RIKEN Center for Advanced Intelligence Project, Tokyo, Japan. His research focuses on integrating machine learning and high-performance computing for disaster management using remote sensing technologies.